Machine Learning and Laboratory Medicine: Now and the Road Ahead


By Thomas J.S. Durant, MD. Posted on 29 Jul 2019.

(Photo courtesy of AACC)


As demand for healthcare continues to grow, so does the volume of laboratory testing. Researchers in laboratory medicine, as in other sectors, have begun to investigate the use of machine learning (ML) to ease the burden of increasing demand for services and to improve quality and safety.

Over the past decade, the statistical performance of ML on benchmark tasks has improved significantly owing to the increased availability of high-speed computing on graphics processing units (GPUs), the integration of convolutional neural networks, the optimization of deep learning, and ever-larger datasets (2). The details of these achievements are beyond the scope of this article.

However, the emerging consensus is that the general performance of supervised ML (algorithms that rely on labeled datasets) has reached a tipping point where clinical laboratorians should seriously consider enterprise-level, mission-critical applications (Table 1).

In recent years, ML-related research publications in pathology and laboratory medicine have increased significantly (Figure 1). However, despite recent strides in technology and the growing body of literature, few examples exist of ML implemented in routine clinical practice. In fact, some of the more prominent examples of ML in current practice were developed before the recent surge in ML-related publications (3).

This suggests that, despite technological advances, the translation of ML into practice remains slow because of intrinsic limitations of available datasets, the state of ML technology itself, and other barriers.

As laboratory medicine continues to undergo digitalization and automation, clinical laboratorians will likely face the challenges of evaluating, implementing, and validating ML algorithms, both inside and outside their laboratories. Practicing laboratory professionals will benefit from understanding what ML is good for, where it can be applied, and the field’s current state of the art and limitations. This article discusses current implementations of ML technology in clinical laboratory workflows, as well as potential barriers to aligning these two historically distant fields.

WHERE IS THE MACHINE LEARNING?

As ML continues to be adopted and integrated into the complex infrastructure of health information systems (HIS), how ML may influence laboratory medicine practice remains an open question. In particular, it is important to consider barriers to implementation and identify stakeholders for governance, development, validation, and maintenance. However, clinical laboratorians should first consider the context: is the ML application inside or downstream from a laboratory?

Machine Learning Inside Labs

Currently, only a handful of Food and Drug Administration (FDA)-cleared, ML-based commercial products are available for clinical laboratories. The CellaVision DM96, marketed by CellaVision AB (Lund, Sweden), is a prominent example that has been widely adopted since gaining FDA clearance in 2001 (3). More recently, the Accelerate Pheno system, marketed by Accelerate Diagnostics (Tucson, Arizona), uses a hierarchical approach that combines multivariate logarithmic regression and computer vision (4,5). Both systems rely heavily on digital image acquisition and analysis to generate their results.

The recent appearance of FDA-cleared instruments that process digital images is not surprising considering current advancements in computer science, especially significant strides researchers have made with image-based data. Robust ML methods such as image convolution, neural networks, and deep learning have accelerated the performance of image-based ML in recent years (2). Digital images, however, are not as abundant in clinical laboratories as they are in other diagnostic specialties, such as radiology or anatomic pathology, possibly limiting future applications of image-based ML in laboratory medicine.
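To make "image convolution" concrete, here is a minimal sketch in Python. It slides a small kernel over a toy grayscale image and sums element-wise products at each position. The 3×3 vertical-edge kernel and the 4×5 image are invented for illustration; in a convolutional neural network the kernel weights are learned from labeled images rather than set by hand, and many such kernels are stacked in layers.

```python
from typing import List

Matrix = List[List[float]]

def convolve2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution (no padding) of a grayscale image with a small kernel.

    As in most deep-learning libraries, this is technically cross-correlation:
    the kernel is not flipped before sliding it over the image.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output: Matrix = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel.
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output

# Toy 4x5 "image": dark background (0) on the left, bright region (1) on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# Hand-set vertical-edge kernel; a trained network would learn its own weights.
edge_kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
print(convolve2d(image, edge_kernel))
```

The response is large wherever the kernel window straddles the dark-to-bright boundary and zero over the uniform bright region; deeper layers of a network combine many such low-level responses into more complex features.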

Beyond the limited number of commercial applications, ML research in laboratory medicine has also been growing, although the total number of publications remains relatively low. In recent years, researchers have investigated the utility of ML across a broad array of applications, such as analyzing erythrocyte morphology, bacterial colony morphology, thyroid panels, urine steroid profiles, and flow cytometry data, and reviewing test result reports for quality assurance (6-11).

Although some institutions have successfully integrated homegrown ML systems into their workflows, few research models overall have made the transition to clinical practice. Despite developing better-performing models, researchers often struggle, for a variety of reasons, with the proverbial last mile of clinical integration. In particular, the literature offers little to no guidance on the statistical performance metrics by which to evaluate ML models, on the design of clinical validation experiments, or on how to build more modular ML models that integrate with current laboratory medicine information technology (IT) infrastructures and workflows.
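Because guidance on evaluation metrics is sparse, it may help to see the standard candidates side by side. The Python sketch below computes sensitivity, specificity, and positive and negative predictive value at a fixed threshold, plus the area under the ROC curve (AUC) via its rank interpretation. The labels, scores, and 0.5 threshold are hypothetical; which of these metrics should govern a given clinical validation is exactly the open question described above.

```python
from typing import Dict, Sequence

def _ratio(num: float, den: float) -> float:
    # Return NaN rather than raising when a class is absent from the sample.
    return num / den if den else float("nan")

def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Confusion-matrix metrics for a binary classifier (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "sensitivity": _ratio(tp, tp + fn),
        "specificity": _ratio(tn, tn + fp),
        "ppv": _ratio(tp, tp + fp),
        "npv": _ratio(tn, tn + fn),
    }

def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC = probability that a random positive outscores a random negative (ties = 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return _ratio(wins, len(pos) * len(neg))

# Hypothetical validation set: reference labels and model scores.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.2, 0.1, 0.05]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

metrics = binary_metrics(y_true, y_pred)
auc = roc_auc(y_true, scores)
print(metrics, round(auc, 3))
```

PPV and NPV depend on disease prevalence in the validation sample, so they may not transfer between populations even when sensitivity and specificity do.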

In all likelihood, the reason for clinical laboratories’ slow adoption of ML, from both commercial and research sources, is multifactorial and arguably stems from more than the intrinsic limitations of the core technology itself. Like other much-publicized technologies, such as “big data” or blockchain, ML is a tool that requires a supportive system architecture. While the core technology is showing promising results, its use in daily practice is likely to remain limited until developers and software engineers offer clinical IT systems that allow easy integration with existing workflows.
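One way to picture a "supportive system architecture" is as a stable contract between a model and the systems that call it. The Python sketch below is hypothetical throughout: `DeployedModel`, `ModelRegistry`, the `HGB` test code, and the placeholder threshold "model" are invented for illustration and do not correspond to any vendor or LIS API. Declaring a model's name, version, and required inputs lets a host system validate calls and swap or roll back models without changing the calling code.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Mapping, Tuple

@dataclass(frozen=True)
class DeployedModel:
    """Hypothetical wrapper that makes a model's contract explicit to the host system."""
    name: str
    version: str
    required_inputs: Tuple[str, ...]   # hypothetical local test codes
    predict_fn: Callable[[Mapping[str, float]], float]

    def predict(self, inputs: Mapping[str, float]) -> float:
        # Refuse to score when a declared input is absent, rather than guessing.
        missing = [code for code in self.required_inputs if code not in inputs]
        if missing:
            raise ValueError(f"{self.name} v{self.version}: missing inputs {missing}")
        return self.predict_fn(inputs)

class ModelRegistry:
    """Hypothetical registry: callers ask for a model by name, not by implementation."""
    def __init__(self) -> None:
        self._active: Dict[str, DeployedModel] = {}

    def register(self, model: DeployedModel) -> None:
        # Registering a new version under the same name replaces the active one.
        self._active[model.name] = model

    def predict(self, name: str, inputs: Mapping[str, float]) -> float:
        return self._active[name].predict(inputs)

registry = ModelRegistry()
# Placeholder "model": a fixed hemoglobin cutoff stands in for a trained classifier.
registry.register(DeployedModel(
    name="anemia_flag",
    version="1.0",
    required_inputs=("HGB",),
    predict_fn=lambda x: 1.0 if x["HGB"] < 12.0 else 0.0,
))
print(registry.predict("anemia_flag", {"HGB": 10.5}))
```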

Machine Learning Outside Labs

As electronic health records (EHRs) continue to evolve and accumulate more data, commercial EHR vendors are looking to expand their data access and analytic capabilities. They have begun offering ML models designed for use within their systems and, in some cases, are allowing access to third-party models. Vendors often package ML software into clinical decision support (CDS) tools, an increasingly popular venue for blending ML and clinical medicine.

While CDS tools traditionally rely on rule-based systems, vendors now are using ML in predictive alarms and syndrome surveillance tools, aimed at assisting clinical decision makers in complex scenarios.
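The contrast between rule-based and ML-based CDS can be sketched in a few lines of Python. The rule fires when a single analyte crosses a fixed cutoff; the ML-style score combines several laboratory inputs through weights into a probability. Every number here (the 2.0 mmol/L lactate cutoff, the coefficients, the intercept, and the 0.5 alert threshold) is hypothetical and chosen only to show how the two approaches can disagree on the same patient.

```python
import math
from typing import Dict

def rule_based_alert(lactate_mmol_l: float) -> bool:
    # Traditional CDS: one analyte, one fixed (hypothetical) cutoff.
    return lactate_mmol_l > 2.0

# Hypothetical coefficients; a real model would learn these from labeled training data.
WEIGHTS: Dict[str, float] = {
    "lactate_mmol_l": 0.8,
    "wbc_10e9_l": 0.15,
    "creatinine_mg_dl": 0.5,
}
INTERCEPT = -4.0

def ml_risk_score(features: Dict[str, float]) -> float:
    """Logistic-regression-style score: weighted sum of inputs mapped to a probability."""
    z = INTERCEPT + sum(w * features[name] for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))

patient = {"lactate_mmol_l": 1.8, "wbc_10e9_l": 15.0, "creatinine_mg_dl": 1.6}
print(rule_based_alert(patient["lactate_mmol_l"]))  # lactate alone is below the cutoff
print(ml_risk_score(patient) >= 0.5)                # combined inputs cross the alert threshold
```

Because the score depends on several laboratory results at once, a change in how any one of them is reported can shift the output, which is one reason laboratorians have a stake in these tools.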

In their current state, ML algorithms usually rely on structured data for training and for generating predictions. While a significant portion of EHR data is unstructured or semistructured, laboratory information remains one of the largest sources of structured data, and it is not uncommon for ML-based CDS tools to rely heavily on laboratory data as input. As CDS tools proliferate, the role of laboratory medicine in developing, validating, and maintaining these models remains important yet poorly defined.

In addition, like calculated laboratory results such as estimated glomerular filtration rate (eGFR), probability scores generated by ML models that rely on laboratory data could arguably be subject to regulation by traditional governing and accrediting organizations such as the College of American Pathologists (CAP), the FDA, or the Joint Commission.
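To make the analogy concrete: eGFR is a deterministic function of a measured analyte plus demographics, and an ML probability score is likewise a function of laboratory inputs, only with many more learned parameters. The Python sketch below implements the race-free CKD-EPI 2021 creatinine equation as one example of a calculated result. It is for illustration only; any clinical implementation should be verified against the published equation and the laboratory's own validated calculation.

```python
def egfr_ckd_epi_2021(scr_mg_dl: float, age_years: float, female: bool) -> float:
    """eGFR in mL/min/1.73 m^2 from serum creatinine (race-free CKD-EPI 2021 equation)."""
    kappa = 0.7 if female else 0.9
    alpha = -0.241 if female else -0.302
    ratio = scr_mg_dl / kappa
    egfr = (142.0
            * min(ratio, 1.0) ** alpha
            * max(ratio, 1.0) ** -1.200
            * 0.9938 ** age_years)
    if female:
        egfr *= 1.012
    return egfr

# Example: 50-year-old male with serum creatinine 1.0 mg/dL (roughly 92 mL/min/1.73 m^2).
print(round(egfr_ckd_epi_2021(1.0, 50, female=False)))
```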

While the regulation of ML remains an openly debated topic in the field of computer science, the growing consensus among experts in the medical community is that rigorous oversight of these models is appropriate to ensure their safety and reliability in clinical medicine.

In 2017, the FDA released draft guidance on CDS software in an attempt to clarify the scope of its regulatory oversight (12). While this guidance is still subject to change, it is clear that the agency is committed to oversight in this area. Until the guidance is finalized, subjecting ML models to the rigor of peer review may be the next best thing.

To deliver promising ML technology at the bedside, the IT and medical communities may need to collaborate with ML researchers and vendors on validation studies. Clinical laboratorians may be particularly well suited to guide these efforts, owing not only to ML models’ frequent reliance on laboratory data but also to laboratorians’ expertise in validating new technology for clinical use.

As the use of ML in the post-analytic phase spreads, clinical laboratorians will need to pay increasing attention to which laboratory data are being used and how. For example, changes within laboratory information systems may have unintended consequences for downstream applications that rely on properly mapped laboratory result data. Health systems will also benefit from clinical laboratorians’ insight into how ML can improve patient care using laboratory data.
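A simple safeguard against the kind of upstream change described above is to check a model's inputs against an explicit contract before scoring. In the Python sketch below, the local test codes (`CREAT`, `HGB`), expected units, and plausibility bounds are hypothetical; a real deployment would derive them from the laboratory's own test dictionary and the model's documentation.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class InputSpec:
    unit: str
    low: float    # plausibility bounds: a sanity check, not a reference interval
    high: float

# Hypothetical input contract for a downstream model, keyed by local test code.
EXPECTED: Dict[str, InputSpec] = {
    "CREAT": InputSpec(unit="mg/dL", low=0.1, high=20.0),
    "HGB": InputSpec(unit="g/dL", low=2.0, high=25.0),
}

def check_inputs(results: Dict[str, Tuple[float, str]]) -> List[str]:
    """Return problems found in {test_code: (value, unit)}; an empty list means OK to score."""
    problems = []
    for code, spec in EXPECTED.items():
        if code not in results:
            problems.append(f"{code}: missing (test code may have been remapped)")
            continue
        value, unit = results[code]
        if unit != spec.unit:
            problems.append(f"{code}: unit {unit!r}, expected {spec.unit!r}")
        elif not spec.low <= value <= spec.high:
            problems.append(f"{code}: value {value} outside {spec.low}-{spec.high}")
    return problems

# After a hypothetical LIS change: creatinine now in umol/L, hemoglobin under a new code.
print(check_inputs({"CREAT": (88.4, "umol/L"), "HGB_V2": (13.5, "g/dL")}))
print(check_inputs({"CREAT": (1.0, "mg/dL"), "HGB": (13.5, "g/dL")}))
```

Without such a check, a creatinine of 88.4 µmol/L (about 1.0 mg/dL) could be read by a model as 88.4 mg/dL and silently produce a meaningless score.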

BARRIERS TO DEVELOPMENT AND ADOPTION

Common approaches to ML generally fall into three categories: supervised, semisupervised, and unsupervised. Supervised ML relies on a large, accurately labeled dataset to train a model, such as images of leukocytes labeled as lymphocytes or neutrophils for subsequent classification. The current consensus is that supervised ML generates the best models for targeted detection of known classes of data. In many cases, however, the available datasets are not large enough or not labeled accurately enough, and curating accurately labeled datasets is difficult and time-consuming.
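The leukocyte example can be sketched as a minimal supervised classifier. The Python below uses a nearest-centroid rule, one of the simplest supervised methods and a stand-in for the deep networks used on real images: it averages the labeled training examples of each class and assigns a new cell to the class with the closest average. The two features (cell diameter in µm and nuclear lobe count) and all training values are hypothetical; a production system would learn from image-derived features, scale them, and use far more labeled cells.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Features = Tuple[float, float]  # (cell diameter in um, nuclear lobe count); hypothetical

def fit_centroids(X: Sequence[Features], y: Sequence[str]) -> Dict[str, Features]:
    """Average the labeled training examples of each class."""
    sums: Dict[str, List[float]] = defaultdict(lambda: [0.0, 0.0])
    counts: Dict[str, int] = defaultdict(int)
    for (diameter, lobes), label in zip(X, y):
        sums[label][0] += diameter
        sums[label][1] += lobes
        counts[label] += 1
    return {label: (s[0] / counts[label], s[1] / counts[label]) for label, s in sums.items()}

def predict(centroids: Dict[str, Features], x: Features) -> str:
    """Assign x to the class whose centroid is nearest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(x, centroids[label]))

# Hypothetical labeled training set: the "accurately labeled dataset" supervised ML needs.
X_train = [(8.0, 1), (9.0, 1), (7.5, 1), (11.0, 3), (12.0, 4), (11.5, 3)]
y_train = ["lymphocyte"] * 3 + ["neutrophil"] * 3

centroids = fit_centroids(X_train, y_train)
print(predict(centroids, (8.5, 1)), predict(centroids, (11.0, 4)))
```

If some training labels were wrong, the class averages would shift and borderline cells would be misassigned, which is why label accuracy matters as much as dataset size.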

With EHRs, researchers certainly have greater access to data than in years past. However, health information in its native state often is insufficiently structured for the rigorous development of ML models. For example, predictive alarms and syndrome surveillance tools that use supervised ML often rely on datasets delineated by the presence or absence of clinical disease. While ICD-10 codes are a discrete data element that could be used for labeling purposes, experience at our institution indicates that ICD-10 codes are not documented reliably enough to train supervised ML models.
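One way an institution might quantify whether a label source such as ICD-10 codes is reliable enough for training is to measure its agreement with a reference standard, such as chart review, using Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The Python sketch below assumes binary labels; the label values are invented for illustration and are not data from the author's institution.

```python
from typing import Sequence

def cohens_kappa(a: Sequence[int], b: Sequence[int]) -> float:
    """Chance-corrected agreement between two binary (0/1) label sources.

    Undefined (division by zero) if both sources assign the same single label to every case.
    """
    n = len(a)
    observed = sum(1 for x, y in zip(a, b) if x == y) / n
    p_a = sum(a) / n   # fraction labeled positive by source a
    p_b = sum(b) / n   # fraction labeled positive by source b
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    return (observed - expected) / (1 - expected)

# Hypothetical labels for 10 encounters: 1 = disease present.
icd10_label = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]
chart_review = [1, 1, 0, 1, 0, 1, 0, 1, 0, 0]
print(round(cohens_kappa(icd10_label, chart_review), 2))
```

In this toy example raw agreement is 80%, but 50% agreement would be expected by chance alone, so kappa is only 0.6; the code-derived labels also miss two of the five chart-review positives.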

To avoid performance issues associated with inconsistent labels, data scientists can curate custom labels based on specific criteria that define the classes in their datasets. But the criteria for defining classes are often subjective and may lack universal acceptance. For example, a sepsis prediction algorithm may rely on clinical criteria for sepsis used at one institution but not at another. It will become increasingly important for clinical laboratorians to consider how models are trained and which specific clinical definitions establish the functional ground truth for the classes or diseases a model detects.
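The effect of differing definitions can be illustrated with two widely used sepsis screening criteria, SIRS and qSOFA, standing in for two institutions' labeling rules. Neither is by itself a complete definition of sepsis, the SIRS function below omits the band-neutrophil and PaCO2 criteria for brevity, and the patient values are hypothetical. The same encounter is labeled positive under one rule and negative under the other, so a model trained on one set of labels learns a different ground truth.

```python
from dataclasses import dataclass

@dataclass
class Encounter:
    temp_c: float
    heart_rate: float     # beats/min
    resp_rate: float      # breaths/min
    wbc_10e9_l: float     # white cell count, x10^9/L
    systolic_bp: float    # mmHg
    gcs: int              # Glasgow Coma Scale

def sirs_positive(e: Encounter) -> bool:
    # SIRS: at least 2 criteria met (bands and PaCO2 criteria omitted for brevity).
    criteria = [
        e.temp_c > 38.0 or e.temp_c < 36.0,
        e.heart_rate > 90,
        e.resp_rate > 20,
        e.wbc_10e9_l > 12.0 or e.wbc_10e9_l < 4.0,
    ]
    return sum(criteria) >= 2

def qsofa_positive(e: Encounter) -> bool:
    # qSOFA: at least 2 of tachypnea, altered mentation, and low systolic pressure.
    criteria = [
        e.resp_rate >= 22,
        e.gcs < 15,
        e.systolic_bp <= 100,
    ]
    return sum(criteria) >= 2

# Hypothetical febrile, tachycardic patient with leukocytosis but normal mentation and BP.
patient = Encounter(temp_c=38.5, heart_rate=105, resp_rate=20, wbc_10e9_l=13.0,
                    systolic_bp=118, gcs=15)
print(sirs_positive(patient), qsofa_positive(patient))
```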

In addition to variable criteria for clinical disease, some labels have intrinsic variability that may prevent ML models from performing optimally across institutions. Linear models such as logistic and linear regression have shown poor generalizability between institutions (13,14). In healthcare, the problem is multifactorial and may result from population heterogeneity or from discrepancies between the training population and the population in which the model is used. Consequently, ML models trained outside one’s institution may benefit from retraining before go-live, although nothing in the literature yet supports this practice.
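Full retraining is one option; a lighter-weight form of local adaptation used with clinical prediction models is recalibration-in-the-large. It keeps the model's ranking of patients but shifts every prediction by the same amount on the logit scale so that the mean predicted risk matches the locally observed event rate. The Python sketch below finds that shift by bisection. The predictions and outcomes are hypothetical, and whether such an adjustment is sufficient before go-live would itself need local validation.

```python
import math
from typing import List, Sequence

def _logit(p: float) -> float:
    return math.log(p / (1.0 - p))

def _sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def recalibrate_intercept(probs: Sequence[float], outcomes: Sequence[int],
                          tol: float = 1e-10) -> float:
    """Find a logit shift so mean predicted risk equals the local observed event rate.

    Assumes every prob is strictly between 0 and 1 and both outcomes (0 and 1) occur.
    """
    target = sum(outcomes) / len(outcomes)
    logits = [_logit(p) for p in probs]

    def mean_prob(shift: float) -> float:
        return sum(_sigmoid(z + shift) for z in logits) / len(logits)

    lo, hi = -20.0, 20.0  # mean_prob increases with the shift, so bisection converges
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if mean_prob(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0

def apply_shift(probs: Sequence[float], shift: float) -> List[float]:
    return [_sigmoid(_logit(p) + shift) for p in probs]

# Hypothetical: an externally trained model underestimates risk at the local site.
probs = [0.05, 0.10, 0.20, 0.30, 0.40, 0.10, 0.05, 0.20]  # mean predicted risk 0.175
outcomes = [0, 0, 1, 1, 1, 0, 0, 1]                       # observed event rate 0.5
shift = recalibrate_intercept(probs, outcomes)
adjusted = apply_shift(probs, shift)
print(round(shift, 3), round(sum(adjusted) / len(adjusted), 3))
```

Because the same shift is applied to every patient, discrimination (for example, AUC) is unchanged; only calibration is adjusted.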

Lastly, the black-box nature of ML models poses a well-described barrier to adoption. Computer scientists have sought to elucidate how and why models arrive at their outputs, so that end users can see the decision points behind a given score or classification; this area of work is often referred to as explainable artificial intelligence (XAI).

Proponents of XAI argue that it may help identify the source of bias in a model that is producing erroneous results. Ideally, such a tool would also include interactive features that allow the identified bias to be corrected. However, as ML models become more powerful and complex, deriving meaningful insight into their inner logic becomes more difficult. Research into XAI methods is young, and its utility remains an open question.
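As one example of a model-agnostic XAI technique, permutation feature importance shuffles one input column at a time and measures how much performance drops; inputs whose shuffling hurts accuracy are the ones the model relies on. In the Python sketch below, the "black box" is a hypothetical stand-in that secretly uses only its first feature, and the data are invented. This method summarizes a model's overall reliance on each input; it does not by itself explain an individual score or correct a biased model.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]
Model = Callable[[Row], int]

def accuracy(model: Model, X: Sequence[Row], y: Sequence[int]) -> float:
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model: Model, X: Sequence[Row], y: Sequence[int],
                           n_repeats: int = 20, seed: int = 0) -> List[float]:
    """Mean drop in accuracy when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild the dataset with only column j permuted.
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical black box: it uses only feature 0 and ignores feature 1.
def black_box(row: Row) -> int:
    return int(row[0] > 5.0)

X = [[1.0, 3.0], [2.0, 8.0], [6.0, 1.0], [7.0, 9.0], [3.0, 5.0], [8.0, 2.0]]
y = [0, 0, 1, 1, 0, 1]
print(permutation_importance(black_box, X, y))
```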

WHAT’S NEXT FOR MACHINE LEARNING?

ML offers significant potential to improve the quality of services provided by laboratory medicine. Early commercial and research-driven ML applications in our field have shown promising results. Despite nagging problems with model generalizability, oversight, and physician adoption, we should expect a steady influx of ML-based technology into laboratory medicine in the coming years.

Laboratory medicine professionals will need to understand what the technology can do reliably and what its pitfalls are, and to establish best practices as we introduce ML models into clinical workflows.

Thomas J.S. Durant, MD, is a clinical fellow and resident physician in the department of laboratory medicine at Yale University School of Medicine in New Haven, Connecticut. Email: thomas.durant@yale.edu

At the 71st AACC Annual Scientific Meeting & Clinical Lab Expo, scientific sessions cover a wide array of dynamic areas of clinical laboratory medicine. For sessions related to this and other Data Analytics topics, visit https://2019aacc.org/conference-program.

Over the past decade, the statistical performance of ML on benchmark tasks has improved significantly due to increased availability of high-speed computing on graphic processing units, integration of convolutional neural networks, optimization of deep learning, and ever larger datasets (2). The details of these achievements are beyond the scope of this article.

However, the emerging consensus is that the general performance of supervised ML—algorithms which rely on labeled datasets—has reached a tipping point where clinical laboratorians should seriously consider enterprise-level, mission-critical applications (Table 1).

In recent years, research publications related to ML have increased significantly in pathology and laboratory medicine (Figure 1). However, despite recent strides in technology and the growing body of literature, few examples exist of ML implemented into routine clinical practice. In fact, some of the more prominent examples of ML in current practice were developed prior to the recent inflection in ML-related publications (3).

This underscores the possibility that despite technological advancements, progress in ML remains slow due to intrinsic limitations of available datasets, the state of ML technology itself, and other barriers.

As laboratory medicine continues to undergo digitalization and automation, clinical laboratorians will likely be confronted with the challenges associated with evaluating, implementing, and validating ML algorithms, both inside and outside their laboratories. Understanding what ML is good for, where it can be applied, and the ML field’s state-of-the-art and limitations will be useful to practicing laboratory professionals. This article discusses current implementations of ML technology in modern clinical laboratory workflows as well as potential barriers to aligning the two historically distant fields.

WHERE IS THE MACHINE LEARNING?

As ML continues to be adopted and integrated into the complex infrastructure of health information systems (HIS), how ML may influence laboratory medicine practice remains an open question. In particular, it is important to consider barriers to implementation and identify stakeholders for governance, development, validation, and maintenance. However, clinical laboratorians should first consider the context: is the ML application inside or downstream from a laboratory?

Machine Learning Inside Labs

Currently, there are just a handful of Food and Drug Administration (FDA)-cleared, ML-based commercial products available for clinical laboratories. The Cellavision DM96, marketed by Cellavision AB (based in Lund, Sweden), is a prominent example that has been adopted widely

since gaining FDA clearance in 2001 (3). More recently, the Accelerate Pheno, marketed by Accelerate Diagnostics in Tucson, Arizona, uses a hierarchical system that combines multivariate logarithmic regression and computer vision (4,5). Both systems rely heavily on digital image acquisition and analysis to generate their results.

The recent appearance of FDA-cleared instruments that process digital images is not surprising considering current advancements in computer science, especially significant strides researchers have made with image-based data. Robust ML methods such as image convolution, neural networks, and deep learning have accelerated the performance of image-based ML in recent years (2). Digital images, however, are not as abundant in clinical laboratories as they are in other diagnostic specialties, such as radiology or anatomic pathology, possibly limiting future applications of image-based ML in laboratory medicine.

Beyond the limited number of commercial applications, ML research in laboratory medicine also has been growing, although the total number of publications remains relatively low. In recent years, researchers have investigated the utility of ML for a broad array of datasets, such as analyzing erythrocyte morphology, bacterial colony morphology, thyroid panels, urine steroid profiles, flow cytometry, and to review test result reports for quality assurance (6-11).

While some institutions have successfully integrated homegrown ML systems into their workflows, few have successfully transitioned to clinical practice. Despite the development of better performing models, researchers for a variety of reasons often find difficulty with the proverbial last mile of clinical integration. In particular, the literature offers little to no guidance on statistical performance metrics by which to evaluate ML models, the design of clinical validation experiments, or on how to create more modular ML models that integrate with current laboratory medicine information technology (IT) infrastructures and workflows.

In all likelihood, the reason for clinical laboratories’ slow adoption of ML, both from commercial and research sources, is multifactorial, and arguably emanates from more than just the intrinsic limitations of the core technology itself. Similar to other technologies that receive a lot of attention, such as “big data” or blockchain, ML remains a tool that requires a supportive system architecture. While the core technology is demonstrating promising results, its prevalence in daily practice is likely to remain limited until developers and software engineers offer clinical IT systems that allow easy integration with existing workflows.

Machine Learning Outside Labs

As electronic health records (EHR) continue to evolve and accumulate more data, commercial EHR vendors are looking to expand their data access and analytic capabilities. They have begun offering ML models designed for use within their systems and in some cases are allowing access to third-party models. Vendors often package ML software into clinical decision support (CDS), an increasingly popular location for blending ML and clinical medicine.

While CDS tools traditionally rely on rule-based systems, vendors now are using ML in predictive alarms and syndrome surveillance tools, aimed at assisting clinical decision makers in complex scenarios.

In their current state, ML algorithms usually rely on structured data for training and subsequently generating predictions. While a significant portion of EHRs contains unstructured and semistructured data, laboratory information remains one of the largest sources for structured data, and it is not uncommon for ML-based CDS tools to rely heavily on laboratory data as input. As CDS tools proliferate, the role of laboratory medicine in developing, validating, and maintaining these models remains important yet poorly defined.

In addition, similar to calculated laboratory results such as estimated glomerular filtration rate, probability scores generated by ML models that rely on laboratory data could arguably be subject to regulation by traditional governing and accrediting organizations such as College of American Pathologists, FDA, or the Joint Commission.

While the regulation of ML remains an openly debated topic in the field of computer science, the growing consensus among experts in the medical community is that rigorous oversight of these models is appropriate to ensure their safety and reliability in clinical medicine.

In 2017, FDA released draft guidance on CDS software in an attempt to provide clarity on the scope of its regulatory oversight (12). While these guidelines are still subject to change, it’s clear the agency is committed to oversight in this area. Until guidelines are formalized, subjecting ML models to the rigor of the peer-reviewed process may be the next best thing.

To deliver promising ML technology at the bedside, the IT and medical communities may need to collaborate with ML researchers and vendors to support validation studies. Clinical laboratorians may be particularly suited for guiding these types of efforts, owing not only to ML models’ frequent reliance on laboratory data but also laboratorians’ expertise in validating new technology for clinical purposes.

As ML in the post-analytic phase propagates, clinical laboratorians will need to become increasingly attentive to which laboratory data are being used and how. For example, changes within laboratory information systems may have unintended consequences on downstream applications that rely on properly mapped laboratory result data. Health systems will also benefit from clinical laboratorians’ insight on how ML can improve patient care using laboratory data.

BARRIERS TO DEVELOPMENT AND ADOPTION

Three categories generally describe common approaches to ML: supervised, semisupervised, and unsupervised. Supervised ML relies on a large, accurately labeled dataset to train an ML model, such as labeling images of leukocytes as lymphocytes or neutrophils for subsequent classification. Currently, the consensus is that supervised ML will generate the best models for targeted detection of known classes of data. But in many cases the data sets required are not large enough or labeled accurately enough. However, the process of curating accurately labeled datasets is difficult and time-consuming.

With EHRs, researchers certainly have greater access to data than in years past. However, health information in its native state often is insufficiently structured for the rigorous development of ML models. For example, predictive alarms and syndrome surveillance tools that use supervised ML often rely on datasets delineated by the presence or absence of clinical disease. While ICD-10 codes are a discrete data element that could be used for labeling purposes, experience at our institution indicates that ICD-10 codes are not documented reliably enough to train supervised ML models.

To avoid performance issues associated with inconsistent labels, data scientists can curate custom labels based on specific criteria to define the classes in their datasets. But criteria for defining classes are often subjective and may lack universal acceptance. For example, sepsis prediction algorithms may rely on clinical criteria of sepsis used at one institution but not another. It will become increasingly important for clinical laboratorians to consider how models are trained and which specific clinical definitions define the functional ground-truth in an ML model for the classes or disease being detected.

In addition to issues with variable criteria for clinical disease, some labels also have intrinsic variability that may preclude ML from optimal performance across institutions. Linear models such as logistic and linear regression have shown poor generalizability between institutions (13,14). In healthcare, the problem is multifactorial and may result from population heterogeneity, or from discrepancies between the ML training population and the use case or test population. Consequently, ML models trained outside one’s institution may benefit from retraining before go-live. However, nothing in the literature supports this practice.

Lastly, the black box nature of ML models themselves poses a well-described barrier to adoption. Computer scientists have sought to elucidate how and why models arrive at the answers they generate in order to demonstrate to end users the decision points used to arrive at a given score or classification, often referred to as explainable artificial intelligence (XAI).

Proponents for XAI argue that it may help investigate the source of bias in an ML model in a scenario where a model is producing erroneous results. Ideally such a tool would also include interactive features to allow correction of the bias identified. However, as ML models become more powerful and complex, the ability to derive meaningful insight into their inner logic becomes more difficult. The practice of investigating methods for XAI is young, and its utility remains an open question.

WHAT’S NEXT FOR MACHINE LEARNING?

The powerful technology of ML offers significant potential to improve the quality of services provided by laboratory medicine. Early commercial and research-driven applications have demonstrated promising results with ML-based applications in our field. Despite nagging problems with model generalizability, oversight, and physician adoption, we should expect a steady influx of ML-based technology into laboratory medicine in the coming years.

Laboratory medicine professionals will need to understand what can be done reliably with the technology, what the pitfalls are, and to establish what constitutes best practices as we introduce ML models into clinical workflows.

Thomas J.S. Durant, MD, is a clinical fellow and resident physician in the department of laboratory medicine at Yale University School of Medicine in New Haven, Connecticut. +Email: thomas.durant@yale.edu

At the 71st AACC Annual Scientific Meeting & Clinical Lab Expo, scientific sessions cover a wide array of dynamic areas of clinical laboratory medicine. For sessions related to this and other Data Analytics topics visit https://2019aacc.org/conference-program.

Latest AACC 2019 News

- Instrumentation Laboratory Presents New IVD Testing System

- Quidel Welcomes Newest Member of Triage Family

- ERBA Mannheim Unveils Next-Generation Automation

- Roche Demonstrates How Health Networks Are Driving Change in Labs and Beyond

- BioMérieux Spotlights Diagnostic Solutions in Use of Antibiotics

- Thermo Shows New Clinical Innovations

- Randox Launches New Innovations

- Streck Introduces Three New Antibiotic Resistance Detection Kits

- EKF Diagnostics Highlights Assay for Diabetes Patient Monitoring

- Sysmex America Exhibits New Products, Automation and Quality Solutions

- BBI Solutions Showcases Mobile Solutions Capabilities at AACC 2019

- MedTest Dx Releases New Product Line for Drugs of Abuse Testing

- Mesa Biotech Launches Molecular Test System at AACC 2019

- Ortho Clinical Diagnostics Highlights Groundbreaking Lab Technology

- Abbott Diagnostics Exhibits POC Diagnostics Solutions at AACC

- Beckman Coulter Demonstrates Latest Innovations in Lab Medicine

Channels

Clinical Chemistry

view channel

New PSA-Based Prognostic Model Improves Prostate Cancer Risk Assessment

Prostate cancer is the second-leading cause of cancer death among American men, and about one in eight will be diagnosed in their lifetime. Screening relies on blood levels of prostate-specific antigen... Read more

Extracellular Vesicles Linked to Heart Failure Risk in CKD Patients

Chronic kidney disease (CKD) affects more than 1 in 7 Americans and is strongly associated with cardiovascular complications, which account for more than half of deaths among people with CKD.... Read moreMolecular Diagnostics

view channel

Diagnostic Device Predicts Treatment Response for Brain Tumors Via Blood Test

Glioblastoma is one of the deadliest forms of brain cancer, largely because doctors have no reliable way to determine whether treatments are working in real time. Assessing therapeutic response currently... Read more

Blood Test Detects Early-Stage Cancers by Measuring Epigenetic Instability

Early-stage cancers are notoriously difficult to detect because molecular changes are subtle and often missed by existing screening tools. Many liquid biopsies rely on measuring absolute DNA methylation... Read more

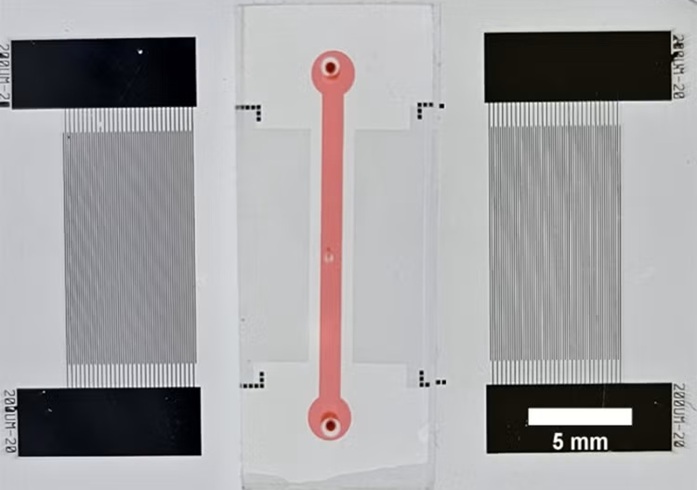

“Lab-On-A-Disc” Device Paves Way for More Automated Liquid Biopsies

Extracellular vesicles (EVs) are tiny particles released by cells into the bloodstream that carry molecular information about a cell’s condition, including whether it is cancerous. However, EVs are highly... Read more

Blood Test Identifies Inflammatory Breast Cancer Patients at Increased Risk of Brain Metastasis

Brain metastasis is a frequent and devastating complication in patients with inflammatory breast cancer, an aggressive subtype with limited treatment options. Despite its high incidence, the biological... Read moreHematology

view channel

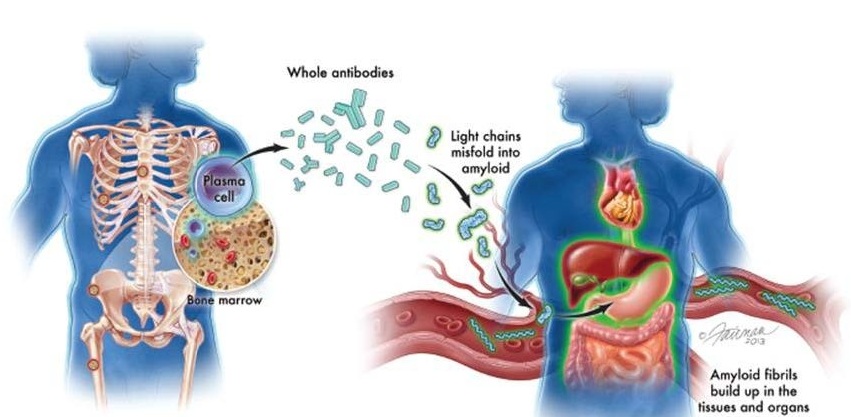

New Guidelines Aim to Improve AL Amyloidosis Diagnosis

Light chain (AL) amyloidosis is a rare, life-threatening bone marrow disorder in which abnormal amyloid proteins accumulate in organs. Approximately 3,260 people in the United States are diagnosed... Read more

Fast and Easy Test Could Revolutionize Blood Transfusions

Blood transfusions are a cornerstone of modern medicine, yet red blood cells can deteriorate quietly while sitting in cold storage for weeks. Although blood units have a fixed expiration date, cells from... Read more

Automated Hemostasis System Helps Labs of All Sizes Optimize Workflow

High-volume hemostasis sections must sustain rapid turnaround while managing reruns and reflex testing. Manual tube handling and preanalytical checks can strain staff time and increase opportunities for error.... Read more

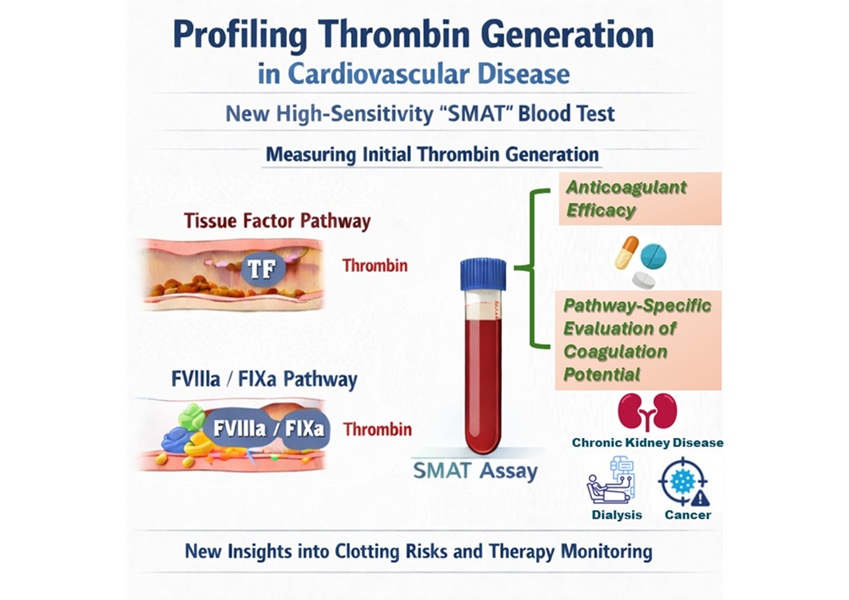

High-Sensitivity Blood Test Improves Assessment of Clotting Risk in Heart Disease Patients

Blood clotting is essential for preventing bleeding, but even small imbalances can lead to serious conditions such as thrombosis or dangerous hemorrhage. In cardiovascular disease, clinicians often struggle... Read moreImmunology

view channelBlood Test Identifies Lung Cancer Patients Who Can Benefit from Immunotherapy Drug

Small cell lung cancer (SCLC) is an aggressive disease with limited treatment options, and even newly approved immunotherapies do not benefit all patients. While immunotherapy can extend survival for some,... Read more

Whole-Genome Sequencing Approach Identifies Cancer Patients Benefitting From PARP-Inhibitor Treatment

Targeted cancer therapies such as PARP inhibitors can be highly effective, but only for patients whose tumors carry specific DNA repair defects. Identifying these patients accurately remains challenging,... Read more

Ultrasensitive Liquid Biopsy Demonstrates Efficacy in Predicting Immunotherapy Response

Immunotherapy has transformed cancer treatment, but only a small proportion of patients experience lasting benefit, with response rates often remaining between 10% and 20%. Clinicians currently lack reliable... Read moreMicrobiology

view channel

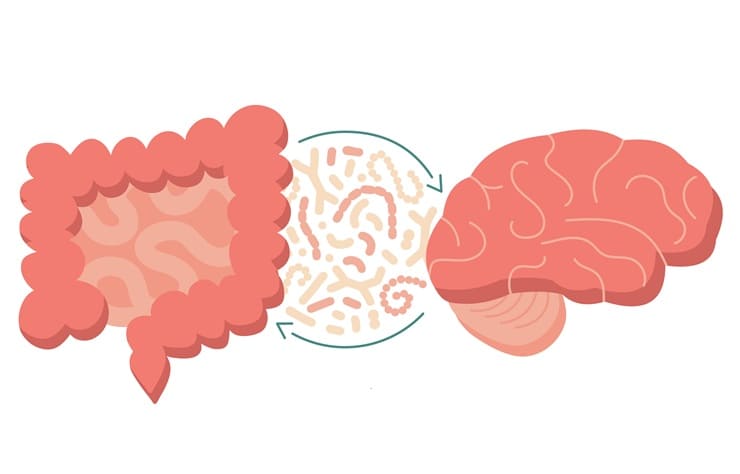

Comprehensive Review Identifies Gut Microbiome Signatures Associated With Alzheimer’s Disease

Alzheimer’s disease affects approximately 6.7 million people in the United States and nearly 50 million worldwide, yet early cognitive decline remains difficult to characterize. Increasing evidence suggests... Read moreAI-Powered Platform Enables Rapid Detection of Drug-Resistant C. Auris Pathogens

Infections caused by the pathogenic yeast Candida auris pose a significant threat to hospitalized patients, particularly those with weakened immune systems or those who have invasive medical devices.... Read morePathology

view channel

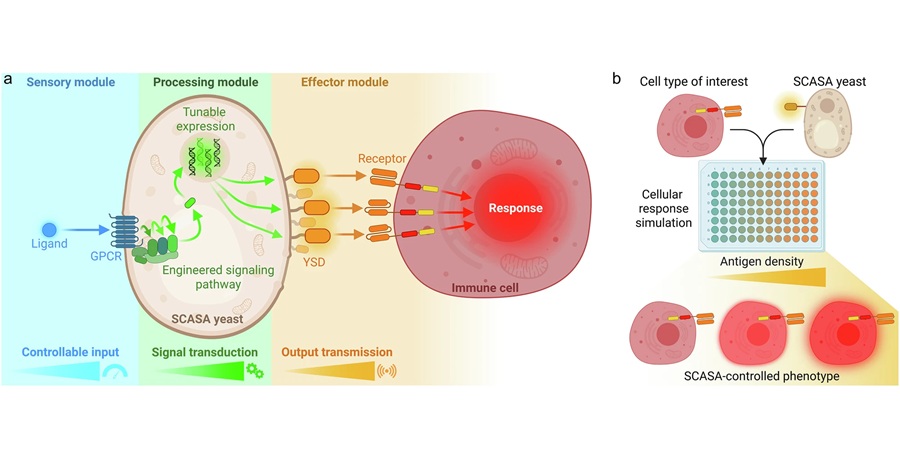

Engineered Yeast Cells Enable Rapid Testing of Cancer Immunotherapy

Developing new cancer immunotherapies is a slow, costly, and high-risk process, particularly for CAR T cell treatments that must precisely recognize cancer-specific antigens. Small differences in tumor... Read more

First-Of-Its-Kind Test Identifies Autism Risk at Birth

Autism spectrum disorder is treatable, and extensive research shows that early intervention can significantly improve cognitive, social, and behavioral outcomes. Yet in the United States, the average age... Read moreTechnology

view channel

Robotic Technology Unveiled for Automated Diagnostic Blood Draws

Routine diagnostic blood collection is a high‑volume task that can strain staffing and introduce human‑dependent variability, with downstream implications for sample quality and patient experience.... Read more

ADLM Launches First-of-Its-Kind Data Science Program for Laboratory Medicine Professionals

Clinical laboratories generate billions of test results each year, creating a treasure trove of data with the potential to support more personalized testing, improve operational efficiency, and enhance patient care.... Read moreAptamer Biosensor Technology to Transform Virus Detection

Rapid and reliable virus detection is essential for controlling outbreaks, from seasonal influenza to global pandemics such as COVID-19. Conventional diagnostic methods, including cell culture, antigen... Read more

AI Models Could Predict Pre-Eclampsia and Anemia Earlier Using Routine Blood Tests

Pre-eclampsia and anemia are major contributors to maternal and child mortality worldwide, together accounting for more than half a million deaths each year and leaving millions with long-term health complications.... Read moreIndustry

view channelNew Collaboration Brings Automated Mass Spectrometry to Routine Laboratory Testing

Mass spectrometry is a powerful analytical technique that identifies and quantifies molecules based on their mass and electrical charge. Its high selectivity, sensitivity, and accuracy make it indispensable... Read more

AI-Powered Cervical Cancer Test Set for Major Rollout in Latin America

Noul Co., a Korean company specializing in AI-based blood and cancer diagnostics, announced it will supply its intelligence (AI)-based miLab CER cervical cancer diagnostic solution to Mexico under a multi‑year... Read more

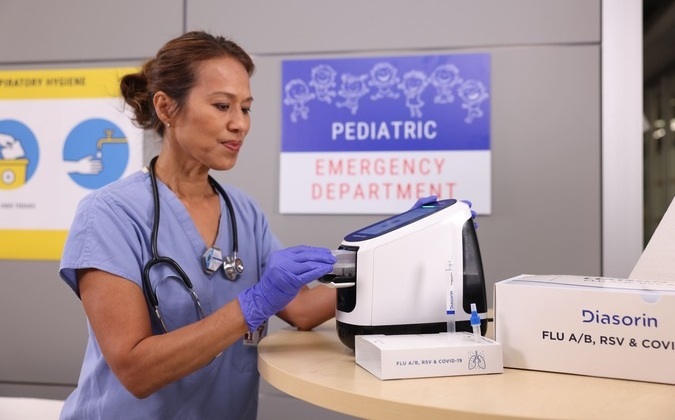

Diasorin and Fisher Scientific Enter into US Distribution Agreement for Molecular POC Platform

Diasorin (Saluggia, Italy) has entered into an exclusive distribution agreement with Fisher Scientific, part of Thermo Fisher Scientific (Waltham, MA, USA), for the LIAISON NES molecular point-of-care... Read more